The integration of Large Language Models (LLMs) into software development is a significant leap forward, promising to streamline many aspects of coding and problem-solving. Arguably, these models can also turn us all into software engineers – not necessarily good ones, but developers nonetheless. Whatever your stance on this innovation, it raises a critical question: how can software development teams effectively blend the advanced capabilities of AI with the invaluable expertise of human developers?

“we are at a pivotal moment where we programmers need to understand and appropriate these technologies, on our own terms, before capitalism does it for us.” – Manuel Odendahl (dev.to article)

The Role of LLMs in Software Development

LLMs, like OpenAI’s GPT series, have ushered in a new era of automated coding and problem-solving tools. These models can generate code snippets, assist in debugging, and streamline documentation processes. However, their integration into software development must be handled with care, balancing AI’s efficiency with human developers’ critical thinking and contextual understanding.

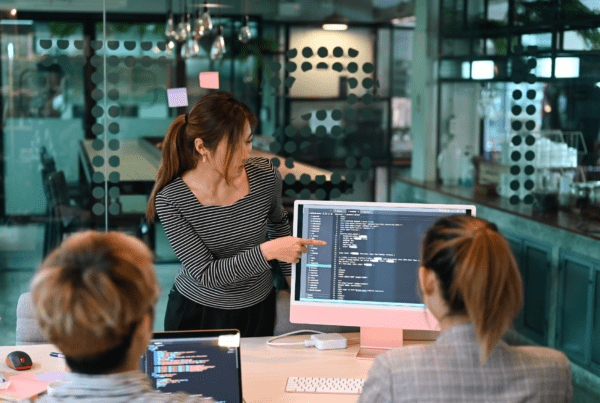

Augmenting Human Capabilities with LLMs

To integrate LLMs effectively, teams should view them as tools that augment, rather than replace, human capabilities. LLMs can handle repetitive tasks or generate initial drafts, but human developers are still needed to refine, optimize, and validate these outputs. Tools like Deepchecks and Encord can be instrumental here, providing continuous evaluation and monitoring of LLMs to help confirm that their outputs meet the expected quality and accuracy standards.

Ensuring Ethical and Bias-Free Development

Ethical considerations and potential biases in AI-generated output are significant concerns. Tools like Giskard, which automatically scans for vulnerabilities and risks, including biases and misinformation, can help address them. This helps maintain ethical integrity and robustness in AI-assisted software development.

Best Practices for Integrating LLMs in Development Workflows

- Plan Ahead: Don’t just jump right into prompting for your code. It’s crucial to plan the project’s architecture, schemas and integrations first. Providing the AI with complete context allows it to grasp the entirety of the project. Without that context, it can produce inaccurate responses, which wastes time and causes frustration.

- Continuous Testing and Monitoring: LLM outputs need to be tested and monitored continuously, not just once. Tools like Deepchecks offer solutions that help check whether AI-generated code adheres to quality standards and help catch biases or errors.

- Error Detection and Correction: Tools such as Giskard can be integrated directly into development notebooks to identify vulnerabilities, improving the performance, fairness, and reliability of AI models in real time.

- Feedback Loop: Establish a feedback loop where developers can contribute to the improvement of LLMs. This helps these models to learn and adapt over time, better aligning with specific development needs.
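
The practices above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: `ProjectContext`, `build_prompt`, `passes_checks`, `review`, and `FeedbackRecord` are all hypothetical names invented for this example, the "generated" code is a hard-coded string standing in for a real LLM response, and the checks are plain equality tests rather than calls to Deepchecks or Giskard.

```python
from dataclasses import dataclass, field


@dataclass
class ProjectContext:
    """Hypothetical container for the planning artifacts a prompt should carry."""
    architecture: str
    schemas: list[str] = field(default_factory=list)
    integrations: list[str] = field(default_factory=list)


@dataclass
class FeedbackRecord:
    """One entry in the feedback loop: what was asked and how the draft fared."""
    prompt: str
    accepted: bool
    note: str


def build_prompt(context: ProjectContext, task: str) -> str:
    """Plan ahead: put the planned context in front of the actual task."""
    sections = [
        "Architecture:\n" + context.architecture,
        "Schemas:\n" + "\n".join(context.schemas),
        "Integrations:\n" + "\n".join(context.integrations),
        "Task:\n" + task,
    ]
    return "\n\n".join(sections)


def passes_checks(generated_code: str, function_name: str,
                  cases: list[tuple[tuple, object]]) -> bool:
    """Test the draft: run it in a scratch namespace against known cases.

    exec() on untrusted code is unsafe; a real setup would run this step
    in an isolated sandbox or container.
    """
    namespace: dict = {}
    try:
        exec(generated_code, namespace)
        func = namespace[function_name]
        return all(func(*args) == expected for args, expected in cases)
    except Exception:
        # Syntax errors, missing functions, and runtime failures all count as a fail.
        return False


def review(prompt: str, generated_code: str, function_name: str,
           cases: list[tuple[tuple, object]], log: list[FeedbackRecord]) -> bool:
    """Gate the draft on the checks and record the outcome for later review."""
    ok = passes_checks(generated_code, function_name, cases)
    note = "passed all cases" if ok else "failed checks; needs human revision"
    log.append(FeedbackRecord(prompt=prompt, accepted=ok, note=note))
    return ok


if __name__ == "__main__":
    ctx = ProjectContext(
        architecture="Single web service backed by a relational database",
        schemas=["users(id, email)"],
        integrations=["payments API"],
    )
    prompt = build_prompt(ctx, "Write slugify(title): lowercase, words joined by hyphens.")
    # Stand-in for an LLM response; no model is actually called here.
    draft = "def slugify(title):\n    return '-'.join(title.lower().split())\n"
    feedback_log: list[FeedbackRecord] = []
    accepted = review(prompt, draft, "slugify", [(("Hello World",), "hello-world")], feedback_log)
    print(accepted, feedback_log[-1].note)
```

The point of the design is that a draft never reaches the codebase on the model's say-so: it has to pass checks a human wrote, and every accept or reject is logged so the team can see where prompts or context need to improve.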

Integrating LLMs into software development workflows offers an exciting opportunity to boost efficiency and foster innovation. However, this requires a balanced approach that combines AI’s computational power with human developers’ nuanced understanding and expertise. By leveraging tools that test ML models before and during deployment, teams can work toward AI use that is effective, ethical, and aligned with high standards of quality and reliability.