To answer this, let’s look at one of the most prominent software engineering companies in the world: Google.

In the 2020 book “Software Engineering at Google: Lessons Learned from Programming Over Time”, published by O’Reilly Media, members of Google’s engineering staff cover a range of software engineering topics. These include a forward-thinking chapter on the importance of measuring engineering productivity.

The chapter begins:

“Google is a data-driven company. We back up most of our products and design decisions with hard data. The culture of data-driven decision making, using appropriate metrics, has some drawbacks, but overall, relying on data tends to make most decisions objective rather than subjective, which is often a good thing. Collecting and analyzing data on the human side of things, however, has its own challenges. Specifically, within software engineering, Google has found that having a team of specialists focus on engineering productivity itself to be very valuable and important as the company scales and can leverage insights from such a team.”

The engineering productivity research team consists of software engineers and social scientists from a variety of fields, including cognitive psychology and behavioural economics. This allows the team to study the ‘human side’ of software development (including personal motivations, incentive structures and strategies for managing complex tasks) as well as the software artifacts that engineers produce.

Before setting out to measure productivity, it is imperative to ensure that the effort in time and resources (and therefore expense) is worthwhile. To make sure the process delivers a real benefit, the team asks stakeholders a series of concrete questions.

The questions include:

1. What result are you expecting, and why?

2. If the data supports your expected result, will appropriate action be taken?

3. If we get a negative result, will appropriate action be taken?

4. Who is going to decide to take action on the result, and when would they do it?

These questions clarify whether measuring the impact of a tool or process on productivity is the right thing to do at this moment. If the answers indicate that it is not, that is OK, and the team moves on. If the measurement is deemed worthwhile, for example because it will give a stakeholder the data they need to make an informed decision, the next stage is to determine which metrics to use.

At Google, the Goals/Signals/Metrics (GSM) framework is used.

A goal is a desired end result. It’s phrased in terms of what you want to understand at a high level and should not contain references to specific ways to measure it.

A signal is how you might know that you’ve achieved the end result. Signals are things we would like to measure, but they might not be measurable themselves.

A metric is a proxy for a signal. It is the thing we actually can measure. It might not be the ideal measurement, but it is something that we believe is close enough.

This framework helps prevent the ‘streetlight effect’, a term that comes from the old joke about someone looking for their lost keys under a streetlight, not because that is where they dropped them, but because that is where the light is. In other words, it forces researchers to consider metrics beyond the obvious, readily available ones. Because the process is ultimately goal-driven, the emphasis is on the metrics that actually serve the goals set out at the start.

“The important thing is to maintain traceability. For each metric, we should be able to trace back to the signal that it is meant to be a proxy for and to the goal it is trying to measure. This ensures that we know which metrics we are measuring and why we are measuring them.”
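To make the traceability idea concrete, here is a minimal Python sketch, assuming a team records its goals, signals and metrics as simple linked records. The class names `Goal`, `Signal` and `Metric`, the `untraceable` helper and the example readability entries are all hypothetical illustrations, not Google’s tooling or the chapter’s exact examples. Each metric holds a reference to the signal it stands in for, and each signal to its goal, so any metric can be traced back, and a metric whose goal was never agreed can be flagged.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Goal:
    # A desired end result, phrased without saying how it will be measured.
    description: str


@dataclass(frozen=True)
class Signal:
    # How we might know the goal was achieved; may not be measurable itself.
    description: str
    goal: Goal


@dataclass(frozen=True)
class Metric:
    # The thing we can actually measure: a proxy for one signal.
    name: str
    signal: Signal

    def trace(self) -> tuple[str, str, str]:
        # Walk back from the metric to its signal and then to its goal.
        return (self.name, self.signal.description, self.signal.goal.description)


def untraceable(metrics: list[Metric], goals: set[Goal]) -> list[Metric]:
    # Hypothetical check: return metrics whose goal is not among the agreed goals.
    return [m for m in metrics if m.signal.goal not in goals]


# Hypothetical example entries loosely based on the readability study.
quality = Goal("Engineers write higher-quality code because of readability")
signal = Signal("Engineers with readability judge their code to be higher quality", quality)
metric = Metric("survey_code_quality_rating", signal)

# A metric picked only because it was easy to collect ("under the streetlight").
stray = Metric("lines_of_code", Signal("More code is written", Goal("Engineers are busier")))

print(metric.trace())
print([m.name for m in untraceable([metric, stray], {quality})])
```

Keeping the links as object references, rather than free-text labels, means a metric cannot be recorded at all without naming the signal it is meant to approximate.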

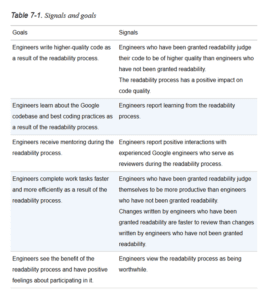

The chapter works through an example put forward by Google’s C++ and Java language teams: readability. Since Google’s early days, these teams have managed the readability process, through which engineers are certified as writing clear, idiomatic code in a given language. The process is expensive, and some engineers find it frustrating, regarding it as an ‘archaic hazing process’. The original question posed by the language teams was therefore “Is the time spent on the readability process worthwhile?” Some signals were measured by analysing tool and code logs; others could only be measured by asking engineers directly, so the study used a mixture of quantitative and qualitative metrics. The table below shows some example signals for the goals of the readability process measurement.

The Google research team divides productivity into five core components, named QUANTS: quality of the code, attention from engineers, intellectual complexity, tempo and velocity, and satisfaction. The complete list of metrics, with their corresponding signals and goals, can be found in the full chapter of Software Engineering at Google (abseil.io).
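As a rough illustration, assuming a team tags each proposed metric with the QUANTS components it informs, a short Python sketch can list the components that no metric covers yet. The `Quants` enum, the `uncovered` function and the metric names below are hypothetical; the chapter does not prescribe this code.

```python
from enum import Enum


class Quants(Enum):
    # The five QUANTS components, in the order the chapter lists them.
    QUALITY_OF_CODE = "quality of the code"
    ATTENTION = "attention from engineers"
    INTELLECTUAL_COMPLEXITY = "intellectual complexity"
    TEMPO_AND_VELOCITY = "tempo and velocity"
    SATISFACTION = "satisfaction"


def uncovered(metric_tags: dict[str, set[Quants]]) -> list[Quants]:
    # Union of every component tagged on any metric (empty if there are no metrics).
    covered = set().union(*metric_tags.values())
    # Return the components no metric touches, in enum definition order.
    return [component for component in Quants if component not in covered]


# Hypothetical metric set that looks only at speed and satisfaction.
proposed = {
    "median_review_latency_hours": {Quants.TEMPO_AND_VELOCITY},
    "survey_satisfaction_score": {Quants.SATISFACTION},
}

for component in uncovered(proposed):
    print("No metric covers:", component.value)
```

Listing the gaps, rather than simply counting metrics, is one way to notice when a proposed metric set measures how fast engineers work while saying nothing about code quality or the attention the work demands.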

After analysing all the data, the team creates recommendations that are built into the developer workflow and incentive structures. Additional training and documentation may be a necessary outcome, but positive change is more likely if it becomes part of developers’ daily habits. In the case of the readability process, the study showed that, overall, it was worth maintaining: engineers who achieved readability learned from the process, and their code was reviewed and submitted faster. The study also showed that there was room for improvement. Engineers were able to communicate pain points and blockers, and the language teams improved the tooling and process to make it faster and more transparent, resulting in a better experience for engineers.

Conclusions

It is clear that a company such as Google, which is growing quickly and has the resources to staff a dedicated team of engineering productivity specialists, benefits hugely from having one. Measuring engineering productivity can tell companies and stakeholders what motivates and demotivates individuals, where inefficiencies lie in their engineering processes, and how to solve the problems identified. This means that companies can grow and respond to demand without unnecessarily increasing their engineering headcount.

However, it is also apparent from this research that small and medium-sized companies will struggle to justify conducting studies like this, let alone staff a dedicated team to carry them out. It is therefore extremely important that larger companies like Google share their research practices and results.